It seems that just about everybody is doing deduplication these days, and rightly so. Storage is expensive and we all need to get more out of less.

I’ve used various vendors’ dedup implementations over the years (EMC, Commvault, Dell, Data Domain) and generally had good success with them, but although Microsoft introduced dedup with the first release of Windows Server 2012, I hadn’t given it much thought. Maybe that was down to bad experiences with their NTFS compression feature over the years (the blue folders still give me shivers), but I thought it was about time to “give ’em a go”.

The perfect opportunity arrived recently when a file server replacement project kicked off, requiring approx. 20TB of data to be stored on a physical server (yes, some people still have a requirement to use physical servers!).

The server is a Dell R720xd with 10 NL-SAS disks in RAID 6, fronted by a modest SSD CacheCade drive on a PERC H710P. All local, no SAN involved. The data is a mix of user home folders, departmental drives and image stores, presented via SMB and DFS to hundreds of users on Mac and Windows clients.

Microsoft’s implementation is post-process rather than inline: files are written to disk as normal and deduplicated later by background or scheduled jobs. I won’t bore you with the install details, as it’s dead easy and you can be up and running in minutes. See here.

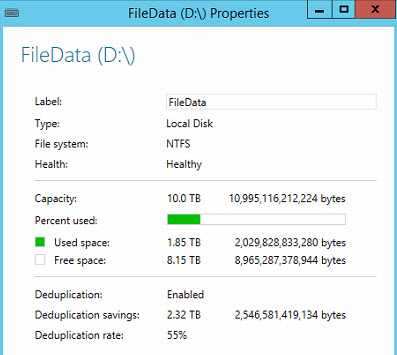
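For anyone who prefers the shell, here is a minimal PowerShell sketch of the install, assuming the data volume is D: (substitute your own drive letter). FS-Data-Deduplication is the feature name of the Data Deduplication role service; everything else is left at its defaults.

```powershell
# Install the Data Deduplication role service (part of File and Storage Services)
Install-WindowsFeature -Name FS-Data-Deduplication

# Enable deduplication on the data volume (D: is an assumption; use your own drive letter)
Enable-DedupVolume -Volume D:

# Confirm the volume now shows as enabled for dedup
Get-DedupVolume -Volume D:
```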

I split the virtual disk into two 10TB volumes, which were then formatted with a 64KB allocation unit size, my general standard for this type of unstructured data. A little over 4TB of data was then restored and the fun began. The goal was to keep everything simple and not try endless tweaks; I wanted to see what this could do literally ‘out of the box’.
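The volume format can be scripted as well. This is a sketch assuming volume D: and NTFS; the "Data1" label is hypothetical, and running it wipes whatever is on the volume.

```powershell
# WARNING: formats the volume and destroys any data on it.
# NTFS with a 64KB (65536-byte) allocation unit size; the "Data1" label is hypothetical.
Format-Volume -DriveLetter D -FileSystem NTFS -AllocationUnitSize 65536 -NewFileSystemLabel "Data1"

# Verify: the "Bytes Per Cluster" line should report 65536
fsutil fsinfo ntfsinfo D:
```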


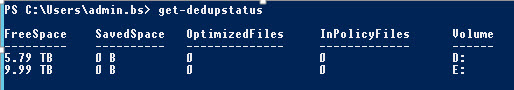

```powershell
Get-DedupStatus
```

This shows the current state of the volumes before any optimization job was started. Clean slate.

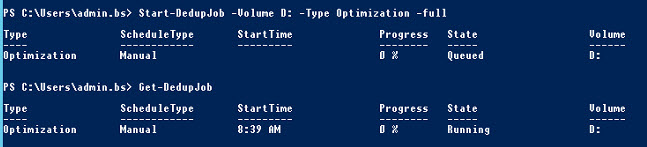

To start a job, simply use the Start-DedupJob cmdlet:

```powershell
# Start a full optimization pass on D: now, rather than waiting for a schedule
Start-DedupJob -Volume D: -Type Optimization -Full
```

Get-DedupJob returns the status of running jobs.

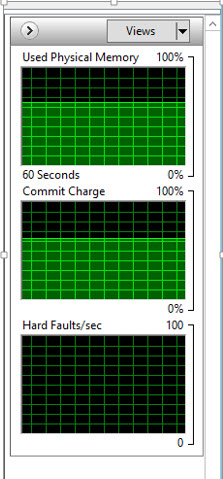

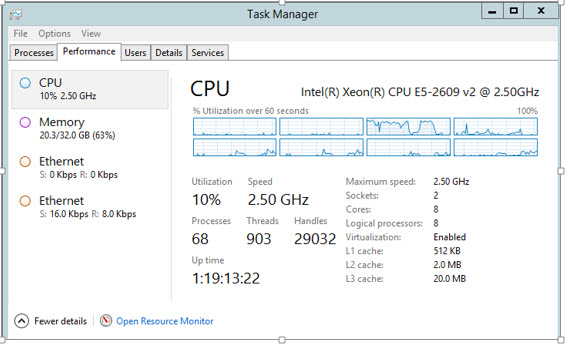
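To keep an eye on a long-running job without re-typing Get-DedupJob, a simple polling loop will do. This sketch assumes volume D: and a five-minute interval, both arbitrary. Alternatively, Start-DedupJob's -Wait switch makes the command block until the job finishes.

```powershell
# Poll dedup jobs on D: every 5 minutes until none are listed any more
do {
    $job = Get-DedupJob -Volume D: -ErrorAction SilentlyContinue
    if ($job) {
        # Progress is the job's percent complete
        $job | Select-Object Type, State, Progress, StartTime
        Start-Sleep -Seconds 300
    }
} while ($job)
```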

One concern of mine was the amount of resources these post-process jobs might consume. There’s no point saving a lot of physical space if I/O grinds to a halt.

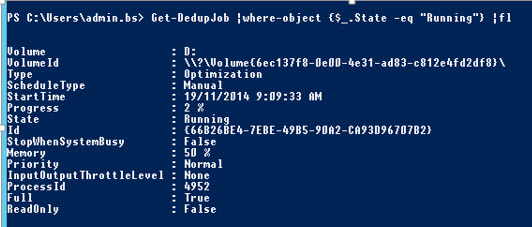

A number of parameters are available to throttle this process and conserve resources for foreground user tasks. By default, when run as above, the job uses 50% of available memory, applies no I/O throttling and runs at normal priority.
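As an example of throttling, the sketch below starts the same optimization job capped at 25% of physical memory and at low priority, using Start-DedupJob's -Memory and -Priority parameters. The values are illustrative, not ones used in this test.

```powershell
# Cap the job at 25% of physical memory (-Memory is a percentage) and run it
# at low priority so foreground file serving gets first call on resources
Start-DedupJob -Volume D: -Type Optimization -Memory 25 -Priority Low
```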

I let it run for about 15 minutes and then assessed what was happening with resources:

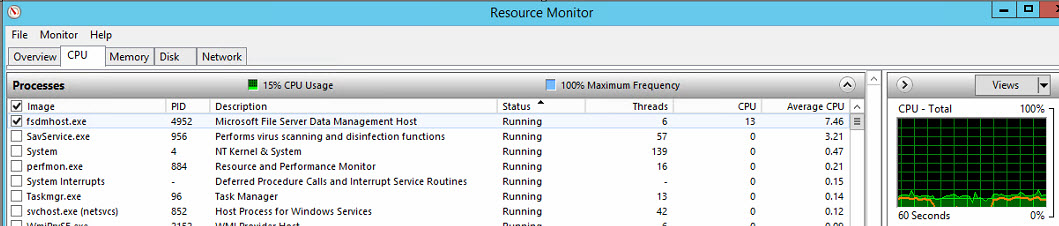

Exactly as promised, 50% of available memory was consumed. CPU use was single-threaded, with one core at around 80%, or roughly 10% of overall compute.
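The step from "one core at 80%" to "10% of overall compute" is just division by the number of logical processors (80 / 10 implies roughly eight on this host). A small hypothetical helper makes the arithmetic explicit; it is not part of any Microsoft module.

```powershell
# Hypothetical helper: convert a process's CPU figure, expressed as a percentage
# of ONE core, into a percentage of the whole machine.
function ConvertTo-OverallCpuPercent {
    param(
        [Parameter(Mandatory)][double]$PerCorePercent,
        [Parameter(Mandatory)][ValidateScript({ $_ -ge 1 })][int]$LogicalProcessors
    )
    [math]::Round($PerCorePercent / $LogicalProcessors, 2)
}

# One core at 80% on a host with 8 logical processors
ConvertTo-OverallCpuPercent -PerCorePercent 80 -LogicalProcessors 8   # returns 10
```

On the server itself, the per-core figure for the dedup process can be read with (Get-Counter '\Process(fsdmhost)\% Processor Time').CounterSamples[0].CookedValue, and [Environment]::ProcessorCount gives the logical processor count. The Process counter is relative to a single core, which is why it can exceed 100% on multi-core hosts.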

Getting the full job stats confirmed the parameters that were in use:

```powershell
# Show every property of the currently running dedup job(s)
Get-DedupJob | Where-Object { $_.State -eq "Running" } | Format-List *
```

Progress was slow but consistent. No more memory was consumed, and CPU use by the fsdmhost.exe process averaged under 8%.

The process completed in around three days, and performance stayed consistent throughout. Disk queue lengths never got high, and the results of periodic user tests were excellent, even during heavy load testing.
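Disk queue length is easy to spot-check from PowerShell while a job runs. A sketch that samples the total physical-disk queue every 5 seconds for one minute (interval and sample count are arbitrary choices):

```powershell
# Sample average disk queue length across all physical disks, 12 samples 5 seconds apart
Get-Counter -Counter '\PhysicalDisk(_Total)\Avg. Disk Queue Length' -SampleInterval 5 -MaxSamples 12 |
    ForEach-Object {
        # Each sample set holds one reading per counter path
        $_.CounterSamples | Select-Object Timestamp, CookedValue
    }
```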

The final results were impressive: a 55% saving, or over 2.5TB saved.
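The two figures hang together: assuming the savings rate is measured against the unoptimized size (space still used plus space saved), 2.5TB saved at 55% points to roughly 4.5TB of original data, in line with the "little over 4TB" restored. A hypothetical helper for that arithmetic, with the Deduplication module's own numbers available from Get-DedupVolume for comparison:

```powershell
# Hypothetical helper: savings rate as a percentage of the unoptimized size,
# where unoptimized size = space still used on disk + space saved by dedup.
function Get-SavingsPercent {
    param(
        [Parameter(Mandatory)][double]$SavedBytes,
        [Parameter(Mandatory)][double]$UsedBytes
    )
    $unoptimized = $SavedBytes + $UsedBytes
    if ($unoptimized -le 0) { return 0 }
    [math]::Round(100 * $SavedBytes / $unoptimized, 1)
}

# 2.5TB saved with 2.05TB still on disk (PowerShell's TB suffix means 2^40 bytes)
Get-SavingsPercent -SavedBytes 2.5TB -UsedBytes 2.05TB   # returns 54.9

# On the server, compare with what the Deduplication module reports:
# Get-DedupVolume -Volume D: | Select-Object Volume, SavedSpace, SavingsRate
```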

Background optimization, scrubbing and garbage collection of your enabled volumes are enabled by default and, based on my testing, should suffice on adequately resourced hosts. If they don’t, or you want processing to happen outside business hours, first disable background optimization:

```powershell
# Turn off the built-in background optimization schedule
Set-DedupSchedule -Name BackgroundOptimization -Enabled $false
```
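To confirm the change took effect, a quick check of the schedule's Enabled property (a sketch):

```powershell
# Enabled should now read False for the built-in background schedule
Get-DedupSchedule -Name BackgroundOptimization | Format-List Name, Type, Enabled
```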

Jobs can then be scheduled using the built-in Windows Task Scheduler or from PowerShell. Of course, all the cool kids are creating scheduled jobs with PowerShell, and the syntax is as follows.

To create schedules (all three types shown):

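```powershell
# Weekend garbage collection, starting 08:00, for up to 5 hours (-Days takes full day names)
New-DedupSchedule -Name "GC" -Type GarbageCollection -Start 08:00 -DurationHours 5 -Days Saturday,Sunday -Priority Normal
# Weeknight scrubbing, starting 01:00, for up to 5 hours, stopping if the system gets busy
New-DedupSchedule -Name "Scrub" -Type Scrubbing -Start 01:00 -StopWhenSystemBusy -DurationHours 5 -Days Monday,Tuesday,Wednesday,Thursday,Friday -Priority Normal
# Weekend optimization, starting 01:00, for up to 6 hours
New-DedupSchedule -Name "Optimization" -Type Optimization -Days Saturday,Sunday -Start 01:00 -DurationHours 6
```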

To modify a schedule (all three types shown):

```powershell
# Change the existing schedules by name; adjust the values to suit
Set-DedupSchedule -Name "GC" -Type GarbageCollection -Start 08:00 -DurationHours 5 -Days Saturday,Sunday -Priority Normal
Set-DedupSchedule -Name "Scrub" -Type Scrubbing -Start 01:00 -StopWhenSystemBusy -DurationHours 5 -Days Monday,Tuesday,Wednesday,Thursday,Friday -Priority Normal
Set-DedupSchedule -Name "Optimization" -Type Optimization -Days Saturday,Sunday -Start 01:00 -DurationHours 6
```

To view your schedule(s):

```powershell
Get-DedupSchedule
```
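Dedup schedules appear to be backed by Task Scheduler tasks, which is why either tool can manage them. A sketch that lists only the enabled schedules and the related scheduled tasks; the \Microsoft\Windows\Deduplication\ task path is an assumption here, so confirm it in Task Scheduler first.

```powershell
# Show only enabled dedup schedules with their timing
Get-DedupSchedule | Where-Object { $_.Enabled } |
    Format-Table Name, Type, StartTime, Days -AutoSize

# List the dedup tasks registered in Task Scheduler (task path is an assumption)
Get-ScheduledTask -TaskPath '\Microsoft\Windows\Deduplication\' |
    Select-Object TaskName, State

# A custom schedule you no longer need can be removed by name:
# Remove-DedupSchedule -Name "GC"
```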

These jobs also run automatically in the background by default and are self-throttling, but if you’re a control freak like me, you’ll create schedules manually 🙂

I like it. Simple to install, simple to run and little to no admin required. More set & forget goodness.

Now I need to restore the rest of my data, let it do its thing and get this into production!